Animate Samples from a Gauss-Markov Posterior¶

We assume you’ve read the tutorial about animating a Gaussian distribution. This tutorial will explain how to use the animate_with* functionality in conjunction with the sampling routines in ProbNum.filtsmooth to animate a sample from a Gauss-Markov posterior.

[1]:

from probnumeval import visual

import numpy as np

import matplotlib.pyplot as plt

import probnum as pn

plt.style.use("../probnum-evaluation.mplstyle")

np.random.seed(42)

Let us start by constructing a simple filtering problem.

[2]:

locations = np.linspace(0, 2*np.pi, 100)

data = 0.5 * np.random.randn(100) + np.sin(locations)

We fit a once-integrated Brownian motion prior to this data.

[3]:

prior = pn.statespace.IBM(1, 1)

measmod = pn.statespace.DiscreteLTIGaussian(state_trans_mat = np.eye(1, 2), shift_vec=np.zeros(1), proc_noise_cov_mat=0.25 * np.eye(1))

initrv = pn.randvars.Normal(np.zeros(2), np.eye(2))

kalman = pn.filtsmooth.Kalman(prior, measmod, initrv)

regression_problem = pn.problems.RegressionProblem(observations=data, locations=locations)

posterior, _ = kalman.filtsmooth(regression_problem)

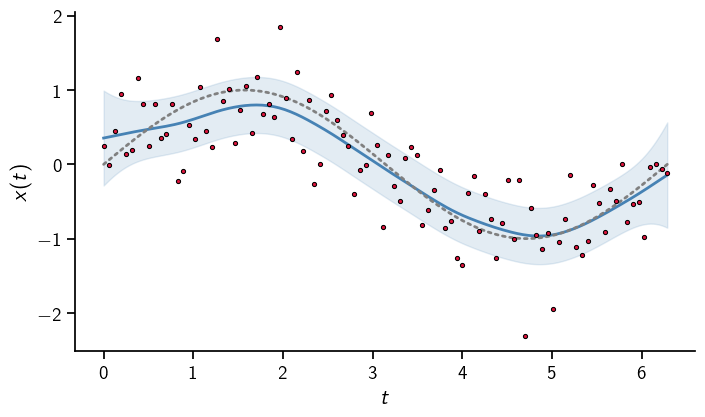

Let us start by visualizing this output

[4]:

t = np.linspace(0, 2*np.pi, 200)

mean = (posterior(t).mean @ np.eye(2, 1)).squeeze()

std = np.sqrt(np.eye(1, 2) @ posterior(t).cov @ np.eye(2, 1)).squeeze()

[5]:

fig, ax = plt.subplots(dpi=100)

ax.plot(t, mean, color="C0")

ax.plot(t, np.sin(t), color="gray", linestyle="dotted")

ax.fill_between(t, mean - 3*std, mean + 3*std, alpha=0.15, color="C0")

ax.plot(locations, data, marker=".", linestyle="None", color="C1")

plt.xlabel("$t$")

plt.ylabel("$x(t)$")

plt.show()

The true solution is captured well.

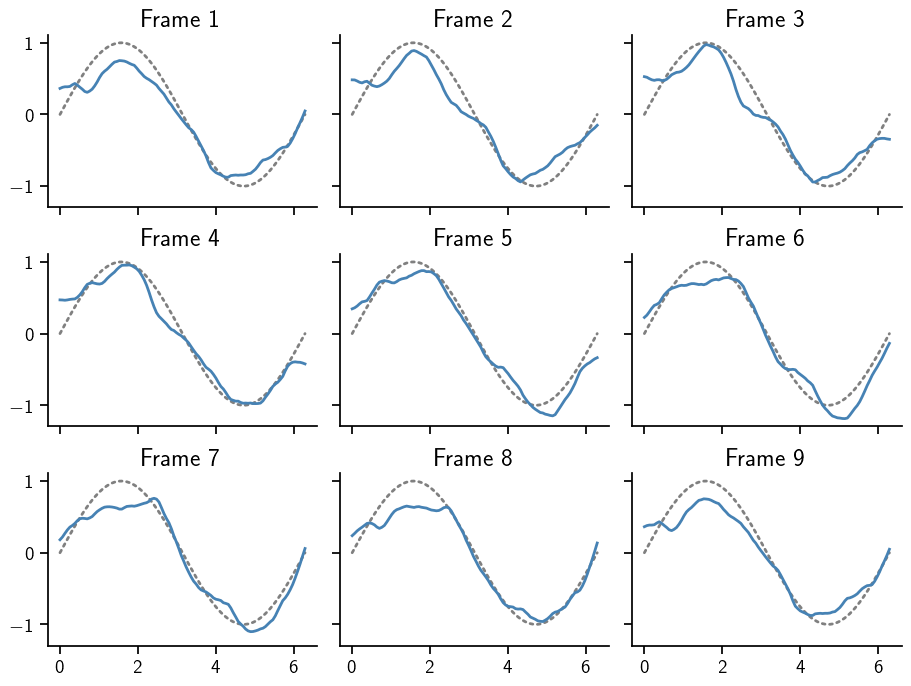

Next, we draw an animated samples on 12 frames from this posterior with the animate_with_great_circle_of_unitsphere function. Since the posterior has a 2-dimensional image, we generate 15 samples on a 200-dimensional sphere and reshape to get an (12, 100, 2) array.

[6]:

num_frames = 9

[7]:

base_measure_samples = visual.animate_with_great_circle_of_unitsphere(200, num_frames=num_frames, endpoint=True).reshape(num_frames, 100, 2)

print(base_measure_samples.shape)

(9, 100, 2)

To circumvent the posterior.sample() function, which itself draws samples from a standard Normal base measure and transforms them according to the rules specified by the posterior, we can call the transformation directly on the spherical pseudo-samples. We visualise those 15 samples in a plot of images (top left to bottom right).

[8]:

res = posterior.transform_base_measure_realizations(base_measure_samples, t=np.linspace(0., 2*np.pi, 100, endpoint=True))

[9]:

fig, axes = plt.subplots(dpi=100, ncols=3, nrows=num_frames // 3, sharey=True, sharex=True, figsize=(num_frames*1, num_frames*.75), constrained_layout=True)

for ax in axes.flatten():

ax.spines['right'].set_visible(False)

ax.spines['top'].set_visible(False)

for idx, (ress, ax) in enumerate(zip(res, axes.flatten())):

ax.plot(posterior.locations, np.sin(posterior.locations), color="gray", linestyle="dotted")

ax.plot(posterior.locations, ress @ np.eye(2, 1),)

ax.set_title(f"Frame {idx + 1}")

plt.show()

ODE Posteriors¶

The same functionality can be used to animate samples from an ODE solution. Here is how. Let us start with solving an ODE with ProbNum.

[10]:

def f(t, x):

return 5*x*(1-x)

y0 = np.array([0.1])

t0, tmax=0., 2.

[11]:

sol = pn.diffeq.probsolve_ivp(f, t0, tmax, y0, adaptive=False, step=0.1, algo_order=1)

# Some extrapolation to visualize uncertainty

t = np.union1d(np.linspace(t0, tmax + 0.5, 100), sol.locations)

solution = sol(t)

mean = solution.mean[:, 0]

std = solution.std[:, 0]

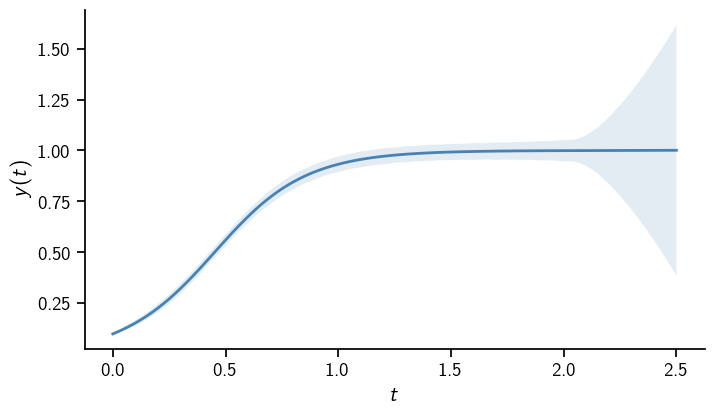

The solution is the usual logistic function. Note how the uncertainty drastically increases beyond the integration interval.

[12]:

fig, ax = plt.subplots(dpi=100)

ax.spines['right'].set_visible(False)

ax.spines['top'].set_visible(False)

ax.plot(t, mean)

ax.fill_between(t, mean - 3*std, mean + 3*std, alpha=0.15)

ax.set_xlabel("$t$")

ax.set_ylabel("$y(t)$")

plt.show()

The solution of a probabilistic ODE solver is essentially a Kalman filter posterior. It even carries one, which can be used to transform base measure realizations (and extracting the dimension of the ODE solution).

[13]:

base_measure_samples = visual.animate_with_great_circle_of_unitsphere(2*len(t), num_frames=num_frames, endpoint=True).reshape(num_frames, len(t), 2)

res = sol.kalman_posterior.transform_base_measure_realizations(base_measure_samples, t=t)[:, :, 0]

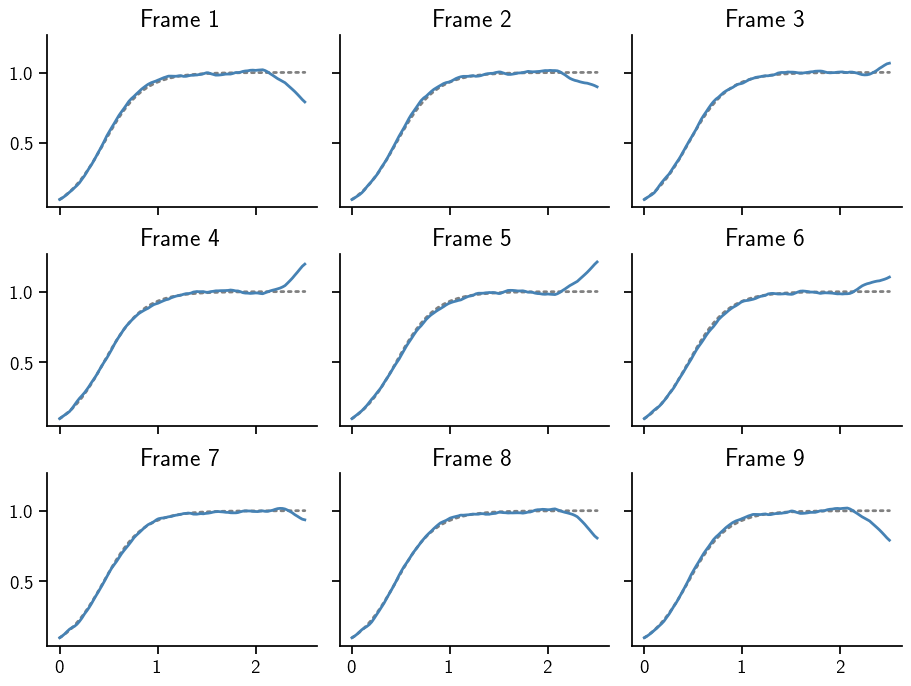

This can be plotted in the same way as before. Note how beyond t=2, the sample moves a lot more than in the rest of the domain. This aligns with the marginal uncertainties plotted above.

[14]:

fig, axes = plt.subplots(dpi=100, ncols=3, nrows=num_frames // 3, sharey=True, sharex=True, figsize=(num_frames*1, num_frames*.75), constrained_layout=True)

for ax in axes.flatten():

ax.spines['right'].set_visible(False)

ax.spines['top'].set_visible(False)

for idx, (ress, ax) in enumerate(zip(res, axes.flatten())):

ax.plot(t, mean, color="gray", linestyle="dotted")

ax.plot(t, ress)

ax.set_title(f"Frame {idx + 1}")

plt.show()

To summarize, samples from a Gauss-Markov posterior can be animated in the same way as samples from a multivariate Gaussian. This is true, because ProbNum algorithms provide a method that transforms samples of a base measure into samples from a posterior.